A Patient’s Journey with Medical AI: The Case of Mrs. Jones

Table of contents

Executive Summary

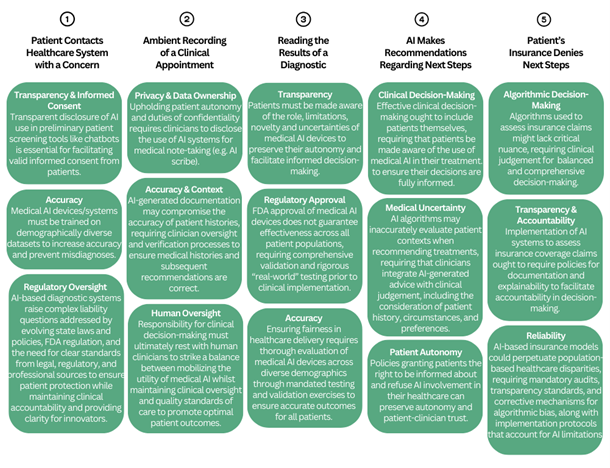

1. Event: Patient Contacts Healthcare System with a Concern

2. Event: Ambient Recording of Clinical Appointment

3. Event: Reading the Results of a Diagnostic

4. Event: AI Makes Recommendations Regarding Next Steps

5. Event: Patient’s Insurance Denies Next Steps

Conclusion

Executive Summary

The ways in which Artificial Intelligence (AI) is involved in healthcare are becoming a matter of public debate. Increasingly, different aspects of medical AI require regulation. This Patient’s Journey with Medical AI is an interactive tool that follows the story of an imaginary patient through five encounters with medical AI. It is designed to highlight some of the ethical issues that emerge from each. It also invites you to answer questions about these interactions, to trigger your moral intuitions and explore your level of knowledge.

The first interaction involves an exchange with a chatbot that the patient believes to be human. This introduces bioethical issues related to transparency, informed consent, accuracy, and regulatory oversight. Should healthcare providers be required to disclose the use of AI in this case? If a chatbot recommends a course of action that causes a patient harm, who should be held responsible?

The second interaction involves a doctor visit where AI-enabled ambient recording is used to take clinical notes. This introduces bioethical issues related to data privacy and ownership, accuracy and context, and human oversight. Should the patient be informed that the conversation is recorded and processed by AI? How might this practice transform the experience of providers and patients?

The third interaction involves the use of an AI-enabled device to assist in diagnosis, without its role being explained to the patient. This introduces bioethical issues related to transparency and regulatory approval. What information should patients receive about newly approved devices? Why is it important to consider the diversity of the dataset on which the device was trained?

The fourth interaction involves an AI system making recommendations regarding next steps. This introduces bioethical issues related to transparency about AI’s role, medical uncertainty, and patient autonomy. What ethical responsibilities does the clinician have in such a scenario? What are the potential drawbacks when patients are unaware of the use of AI to recommend a treatment path?

The last interaction involves the use of an AI algorithm by an insurance company to deny coverage for further care. This introduces bioethical issues related to algorithmic decision-making, transparency, reliability, accountability, and fairness. What are the implications of using AI to assess insurance claims? How can fairness be improved?

Overall, this tool invites you to consider what the ideal use of medical AI looks like and what safeguards should be in place.¹

The Greenwall Foundation is supporting this initiative. Additional support comes from the Donaghue Foundation and the National Institutes of Health’s Bridge2AI initiative.

Disclaimer

“A Patient’s Journey” is not intended to provide legal or ethical advice. Rather, by highlighting potential scenarios and associated ethical, legal, policy, and/or practical concerns, this tool aims to provide a framework for thoughtful consideration and decision-making regarding the implementation and use of medical AI. Furthermore, the response options presented in the survey section of this tool are intended to stimulate critical thinking rather than provide definitive guidance. Users should note that response options vary significantly in their moral, ethical, legal, and practical validity, and professional judgment is required to evaluate their appropriateness in specific contexts.

Policy experts from across the political spectrum were invited to review the tool for accuracy and utility.

Patient Journey with Medical AI: Reviewers

The Hastings on the Hill team is grateful to these policy experts, who reviewed the Patient Journey tool for accuracy and utility. We invited them because of their expertise and their range of political perspectives.

Brian Anderson, MD, CEO, Coalition for Health AI

Julie Barnes, JD, CEO, Maverick Health Policy

Charles E. Binkley, MD, FACS, Director of AI Ethics and Quality, Hackensack Meridian Health; Associate Professor of Surgery, Hackensack Meridian School of Medicine

Kim Warren Cenkl, COO, The Cenkl Foundation

Francis X. Shen, JD, PhD, Professor of Law, University of Minnesota; Member, Harvard Medical School Center for Bioethics; Chief Innovation Officer, MGH Center for Law, Brain & Behavior

Michele M. Schoonmaker, PhD, Owner, Michele Schoonmaker LLC

Jeffrey A. Singer, MD, FACS, Senior Fellow, Cato Institute

1. Event: Patient Contacts Healthcare System with a Concern

Mrs. Jones notices a new dark spot on her neck and worries that it could be skin cancer. Seeking quick guidance, she remembers that her healthcare provider recently introduced a digital service that gives rapid, personalized advice on health-related concerns.

Mrs. Jones sends a text message to the digital service requesting help and includes a photo of the dark spot. Within seconds of sending the text, she receives a response with follow-up questions:

“When did you first notice this spot?”

“Has it changed in size or color?”

“Is it flat or raised?”

The response also acknowledges her concerns: “I understand this might be worrying. It’s best to get this checked by a specialist. Please visit your provider immediately.” It includes a link to schedule an appointment with her provider.

During the exchange, the chatbot powering the service triages her case without involving a human clinician. It uses image recognition and language processing to make an assessment based on a database of dermatological images and medical protocols. Mrs. Jones is glad to receive quick guidance, but she is not aware that she has been interacting with an AI-powered chatbot.

Key Issues:

Transparency & Informed Consent: Should Mrs. Jones be explicitly informed that she’s interacting with an AI chatbot rather than a human clinician? Would truly informed consent require a comparison of AI versus human physician error rates? How does the misconception regarding the nature of the chatbot affect the quality of Mrs. Jones’ informed consent to disclose personal health information?

Transparency is fundamental to establishing trust between patients and healthcare providers. In Mrs. Jones’ case, she believes she is communicating with a clinician rather than an AI bot. This misconception can undermine her ability to provide informed consent because she is not fully informed of the nature of the interaction and its implications. Although AI chatbots can shorten preliminary health screening times, clear notifications that inform patients whenever they are interacting with AI systems, and/or options that allow patients to opt out of AI interactions, should be implemented to support patient autonomy by facilitating fully informed consent.

Accuracy: Does the AI chatbot’s training dataset enable accurate assessments across diverse skin types and tones? How might the dataset impact the reliability of the digital health service as a source of medical guidance?

Medical AI systems must be trained on datasets that include a wide range of patient demographics, much as biomedical research tests the effects of interventions on various populations. For Mrs. Jones, insufficient data about her population could lead to an incorrect assessment, misdiagnosis, or delayed care. Rigorous evaluation of training datasets, transparency about their composition, and developer accountability for addressing bias affecting subpopulations all take time and money, but they support higher-quality care for all.

Regulatory Oversight: What regulatory requirements are appropriate to ensure patient safety while encouraging AI innovation? What causes of action should be available if a patient is harmed by following AI triage recommendations? Should states determine causes of action, allowing liability to vary from state to state?

Misdiagnoses or inappropriate medical advice from an AI-based triage system raise ethical concerns about responsibility and patient protection, which may be addressed by law, regulation, and/or guidelines, all of which are evolving. The law varies among states, allowing, for example, malpractice claims against clinicians, product liability claims against device companies and AI developers, and negligence claims against all of them. On the regulatory side, the Food and Drug Administration (FDA) would likely conclude that an AI-powered chatbot that independently conducts triage is a medical device, triggering requirements for safety and effectiveness. Clear standards specifying acceptable use cases, validation and testing requirements, and liability for AI-generated medical errors can help ensure that AI does not replace or diminish professional clinical accountability, that patients are protected, and that innovators have clarity about what is required.

2. Event: Ambient Recording of Clinical Appointment

Following the advice of the digital health service, Mrs. Jones visits the hospital and is examined by a doctor in the emergency room. The doctor appears engaged, maintaining eye contact and asking detailed questions about her medical history.

The conversation is being recorded and transcribed by an AI-powered clinical documentation system (known as an AI scribe). The AI will then summarize the interaction, draft clinical notes, suggest a preliminary diagnosis, and recommend follow-up actions. The AI vendor may use data from the interaction to improve its transcription and the algorithms underlying the diagnostic system.

The doctor reviews the AI-generated report and, after making minor edits, approves the suggested follow-up for a more detailed skin analysis. However, Mrs. Jones is unaware of the role that AI played in this documentation and decision-making process.

Key Issues:

Privacy & Data Ownership: Should patients be informed when their conversations are being recorded and processed by AI?

Upholding duties of confidentiality and promoting patient autonomy require transparently disclosing data collection processes and obtaining consent. In this scenario, Mrs. Jones is unaware that her conversation, in which she discloses sensitive medical information, is being recorded and processed by AI. This lack of knowledge jeopardizes her privacy, as she has not explicitly consented to the scribe collecting her information, and it could further erode trust between her and the doctor. Accordingly, where an AI scribe is used, policies ought to ensure that healthcare providers disclose the necessary information and obtain informed consent before recording consultations or processing patient data. These processes should also account for the additional time they require and the associated impact on healthcare providers and systems.

Accuracy & Context: Can AI accurately summarize complex medical discussions without missing context or nuance?

AI-generated documentation may omit or misrepresent nuanced human interactions critical to medical care. While human error and bias can also lead to diagnostic inaccuracy, AI systems may create incomplete patient histories or miss important context, affecting diagnostic accuracy. Human review and verification processes take time but can help ensure that AI-generated summaries and recommendations are complete and correct.

Human Oversight: To what extent should doctors rely on AI-generated suggestions for patient care? What effect does reliance have on the quality of care and on clinicians’ ability to maintain and hone their professional skills and judgment?

The final responsibility for clinical decision-making rests with human clinicians. Overreliance on AI-generated summaries and recommendations risks diminishing professional judgment. The role of AI in medicine will likely expand as AI becomes increasingly reliable, though research comparing the error rates of human clinicians and AI has yielded inconsistent results. Well-defined and validated roles for AI, together with clinical oversight frameworks, can help strike the right balance and maintain a high standard of care.

3. Event: Reading the Results of a Diagnostic

The doctor uses a small handheld device to examine the dark spot on Mrs. Jones’ skin. This diagnostic device captures high-resolution images of the lesion, using AI to analyze cellular structures and assess whether the spot appears to be malignant.

Mrs. Jones, curious about the device, which she has never seen before, asks: “What does this thing do?” The doctor reassures her: “It analyzes pictures of skin to see if there is more that we need to do. It’s FDA-approved, don’t worry.” She is then referred for a skin biopsy.

The doctor does not share with Mrs. Jones that the device is one of the first AI-powered tools of its kind, recently approved for clinical use, or that the AI model behind it was trained on a dataset that may or may not fully represent patients with her skin type. The doctor also does not share that the referral decision was heavily influenced by the device’s risk assessment score. As a result, Mrs. Jones consents to diagnostic testing without knowing that her care is heavily influenced by a newly created, minimally tested AI device.

Key Issues:

Transparency: Should patients be informed when AI influences their diagnosis and treatment plans?

Policies should require explicit disclosures to patients about the role, limitations, novelty, and uncertainties of new medical AI devices. Such disclosures, covering the nature of the device and the risks, benefits, and alternatives to its use, are necessary to preserve patient autonomy and ensure ethically appropriate informed decision-making.

Regulatory Approval: What are the limitations of FDA approval for AI-based medical devices? To what extent should these devices be tested before widespread use in medicine?

FDA approval of medical AI devices does not guarantee full clinical effectiveness across all patient groups. Regulatory standards that include comprehensive validation criteria reflecting the people on whom a device is likely to be used can help ensure that it assesses all patients effectively. Rigorous, real-world evaluations before widespread clinical use take time and money. As a result, in some cases patients could be harmed by lacking access to technologies while they are still being tested. The FDA can use expanded access authorities to make products available before approval, particularly for life-threatening diseases and conditions.

Accuracy: Has the device been rigorously tested on diverse patient populations to ensure accuracy when used on patients from various groups?

Ensuring fairness in healthcare requires thorough validation of medical AI across various patient demographics. The FDA can require testing and validation, as well as labeling that specifies if a medical product may not work for certain populations. Policies mandating performance assessments across different populations and ongoing monitoring after deployment require investment, but they help ensure accurate outcomes for all.

4. Event: AI Makes Recommendations Regarding Next Steps

The AI system analyzes Mrs. Jones’ biopsy results and estimates a moderate risk of melanoma. Based on historical data and clinical guidelines, it suggests either a watchful waiting approach or immediate surgical removal.

Mrs. Jones’ doctor, relying on the AI’s recommendations and their own clinical evaluation, leans toward watchful waiting. Although another doctor reviewing the case might have opted for a more aggressive intervention, Mrs. Jones decides to trust her current doctor and does not seek a second opinion.

When she receives treatment advice, Mrs. Jones is not informed that AI helped shape her treatment plan, or that alternative diagnostic and treatment paths might have been possible. She places her trust in her doctor without knowing the basis for the doctor’s recommendations, including the doctor’s heavy reliance on AI to make clinical treatment decisions.

Key Issues:

Clinical Decision-Making: Should doctors disclose AI-generated recommendations and their level of reliance on them?

Failure to inform patients about AI involvement in their diagnostic and treatment processes limits their ability to make informed decisions about their treatment. Requiring clinicians to tell patients about AI involvement will take clinicians’ time, but it will ensure that patients can consider all sources of medical recommendations, whether human or AI. The FDA may influence clinicians’ reliance on AI by requiring the labels of AI-enabled products to specify appropriate steps for verifying results, for example, specifying that a clinician should check portions of pathology slides reviewed by AI to ensure nothing is missed.

Medical Uncertainty: How does AI balance aggressive vs. conservative treatment approaches?

AI decision-making relies on statistical probabilities, which may not fully consider individual patient contexts. As such, it may not be appropriate to choose between conservative and aggressive treatments based solely on AI assessments. Policies that ensure clinicians integrate AI-generated recommendations with comprehensive, patient-specific clinical judgment can facilitate patient-centered care, such as balancing statistical risk with patient values, preferences, and circumstances, including lifestyle and family history.

Patient Autonomy: Should patients have the right to opt out of AI-influenced decision-making?

Policies that explicitly grant patients the right to be informed about AI’s influence on their healthcare, thereby allowing for informed consent or refusal, preserve patient autonomy and reinforce trust in healthcare providers. This is crucial as AI-based tools and processes become integrated into healthcare delivery and as expectations regarding AI’s involvement change, since opt-out options may gradually become more difficult to implement and may come to seem less necessary.

5. Event: Patient’s Insurance Denies Next Steps

A few days later, Mrs. Jones receives a letter from her insurance company:

“Your recent biopsy was covered. However, the requested follow-up imaging is not approved at this time.”

Mrs. Jones is unaware that her insurance claim was reviewed by an AI algorithm that assessed her needs using a prediction model based on risk and cost-effectiveness. The AI flagged her follow-up as “low priority” based on statistical models, leading to an automatic denial of coverage.

Frustrated, Mrs. Jones tries to appeal the decision but struggles to reach a human representative. When she finally does, the agent explains that the AI system has determined that the additional follow-up imaging is “not medically necessary.” The agent cannot provide further details because they themselves do not fully understand how this determination was made. As a result, Mrs. Jones, her doctor, and the insurance agent are unable to properly advocate for covering further testing.

Key Issues:

Algorithmic Decision-Making: Should insurers rely on AI to approve or deny medical coverage?

AI is based on statistical modeling and may lack the nuance critical to high-quality clinical decision-making. Delegating coverage decisions predominantly or entirely to AI raises ethical issues about accountability. Policies that require human oversight for significant healthcare coverage decisions ensure that a clinician’s judgment ultimately determines whether a treatment is necessary. For instance, California has passed a state law that tests one approach to balancing expedience with fairness: it lets insurers use AI to approve, but not deny, insurance claims.

Transparency & Accountability: How can patients challenge AI-based decisions if they do not understand the reasoning behind them?

Accountability via appeal processes may be compromised if core actors, including patients, clinicians, and insurance representatives, do not understand the basis of AI coverage decisions. Policies requiring documentation and explainability may be costly and/or time-consuming, but they enable the conversations among these actors that fair and transparent coverage determinations and appeal processes depend on.

Reliability: How can we ensure that insurance AI models deliver results appropriate for all people?

Insurance decisions based on AI-generated risk analyses could perpetuate and exacerbate documented challenges faced by certain patient populations. As such, policies ought to require audits, transparency standards, and corrective mechanisms to address algorithmic bias and safeguard fair insurance coverage decisions for all patients. Furthermore, these AI systems should be implemented with instructions and protocols that account for their limitations. These might include guidance on appropriate use cases, such as recommending against use when the underlying dataset is too limited to produce reliable results.

Conclusion

Mrs. Jones’ journey illustrates both the promises and the risks of medical AI. AI may improve the efficiency and accuracy of medical care, although it may not perform well in all circumstances. Transparency about its nature, including its benefits and limitations, can support key imperatives of ethical care delivery, including informed consent, accountability, and fairness, when medical AI is incorporated into clinical decision-making.

Key Takeaways for Lawmakers and Regulators:

- Clear disclosures ought to be made to patients about the involvement of medical AI throughout their care journey. Although these conversations may take time, they allow patients to consent to or refuse care based on a fully informed decision-making process, which upholds patient autonomy and promotes trust in the patient-clinician relationship.

- Regulatory oversight of AI’s role in clinical decision-making may raise medical device development costs; however, it can enable regulators to standardize AI’s use based on widely recognized ethical principles (e.g., fairness, transparency, patient privacy, and explainability).

- Ensuring transparency and accountability in AI-driven insurance and coverage determinations requires human oversight to promote understanding, reduce decisional bias, protect underserved patient populations, and ensure fairness.

Final Questions

¹ This tool was created as a part of Hastings on the Hill. Bioethics is the interdisciplinary study of ethical issues arising in the life sciences, healthcare, technology, and health and science policy, drawing on expertise from law, medicine, philosophy, science, technology, and other disciplines.